There are benefits to using the si commands, and I hope to detail them in a future post, but

This is where you'll first turn for Splunk summary indexing, because the docs use them as examples.

I follow this approach for daily summaries of CSV files, where I can split by only one or two fields, and pull out a huge amount of data.

Beyond the higher-level guidelines, there are a few critical technical guidelines

As detailed below, adding additional data points for a set time interval (e.g., avg(val) max(val) min(val)) is

If you're indexing a data source that contains several data points at different time intervals, grab them all.
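To make the "several data points for one time interval" idea concrete, here is a minimal sketch of a summary-populating search that stores the average, maximum, and minimum of `val` for each hour in one pass. The index names `metrics` and `summary_metrics`, the one-hour span, and the split by `host` are assumptions for illustration, not details from this post. It uses plain `stats` with `collect` rather than the si commands.

```spl
index=metrics earliest=-1h@h latest=@h
| bin _time span=1h
| stats avg(val) AS avg_val, max(val) AS max_val, min(val) AS min_val BY _time, host
| collect index=summary_metrics
```

Each hourly run writes one summary row per host carrying all three values, so a dashboard can read any of them back from the summary index without touching the raw events.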

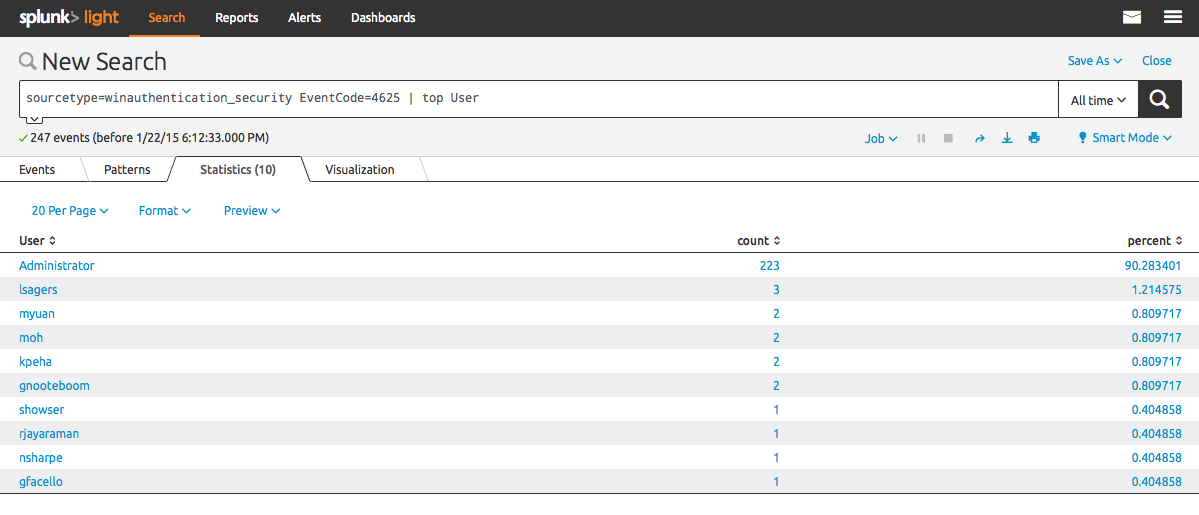

That's the report I'm tossing on the dashboard, that's all the data I'm going to be indexing.

My general process is to build a summary index only when I'm finally ready to productionalize

An example of this would be a report on the top 10 firewall src-dst pairs for firewall denies per day.

If you're indexing 5 TB of logs, this probably isn't true, so it's all the more important to really know your requirements.

The good news is that it is generally pretty easy to clear and backfill a summary index - it just may

Unlike the rest of Splunk, where you've got a ton of flexibility, you want your summary index to be as small as it can be.
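As a rough sketch of the firewall example, a daily scheduled search could compute the top 10 src-dst pairs for denies over the previous day and write only those ten rows to a summary index. The index names `firewall` and `summary_firewall` and the field names `action`, `src_ip`, and `dest_ip` are assumptions; substitute whatever your firewall data actually uses. This is one way to do it with plain `stats` and `collect`, not necessarily the exact search behind the report above.

```spl
index=firewall action=denied earliest=-1d@d latest=@d
| stats count AS deny_count BY src_ip, dest_ip
| sort - deny_count
| head 10
| collect index=summary_firewall
```

The dashboard panel then reads the small summary index instead of the raw logs:

```spl
index=summary_firewall
| table _time, src_ip, dest_ip, deny_count
```

Keeping only the ten rows per day that the report needs is what keeps the summary index small. The trade-off is that a question the summary was not built for (say, the top 20) means going back to the raw data or backfilling.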


At the recent San Francisco Splunk Meetup, there was a brief joking exchange about how the secret to using summary indexing was to ignore the summary index commands (sistats, etc.). This brought up a question about how, realistically, one should use summary indexing, so I decided to create an explanation of how I use it in my environment. There are a lot of ways to use summary indexing (and particularly the si commands); this won't provide full coverage on the topic, but it is definitely a solid way to enter the powerful world of summary indexing.
